Personal Blog

Published: 2024-08-22 in CogSci || Author: Hans Peter Willems - CEO MIND|CONSTRUCT

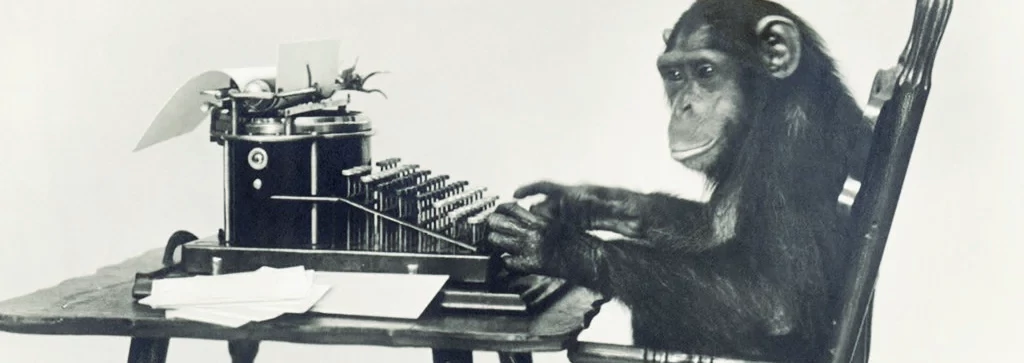

Maybe you have heard of the 'Infinite monkey theorem'. It states that, given an infinite amount of time, a randomly generated string of characters will (almost surely) contain the complete works of William Shakespeare. Popular culture made the image of monkeys banging away on typewriters famous as the means of generating that random string. The theorem can actually be proven mathematically. However, Robert Wilensky once made the following statement:

This statement is, of course, a funny meme, pointing to the idea that many people on the internet behave like monkeys banging away on typewriters. It also points to the idea that the bulk of the text on the internet is of the same quality as randomly generated characters. With the emergence of Large Language Models, such as ChatGPT and similar systems, this gives food for some critical thought. Large Language Models, or LLMs for short, need vast amounts of textual data to be trained on, and every new iteration of these models is trained on even more data than the previous one. There is only one place where you can find such enormous amounts of data: the internet. This means that LLMs are being trained on data of rather debatable quality, as Robert Wilensky's statement implies.

A lot of information on the internet is perfectly usable for humans. That is because we humans are capable of critical thought (well, most of us are), and can therefore separate the wheat from the chaff, so to speak. LLMs, however, have no capacity for critical thought while they are being trained on this data. The idea behind LLMs is that you can somehow build (a kind of) understanding from strings of words, without any semantic foundation. The belief is that understanding will somehow emerge. Take note of the 'somehow'. Another well-known meme in the scientific world is a picture of a complex scientific equation with, somewhere in the middle, the words 'and here a miracle happens'.

You can't build understanding from data that requires understanding in the first place. To reason correctly about the world, you need commonsense knowledge, which is not the same as knowing how to create sentences by stringing words together. Commonsense knowledge means having a validated world model, and the ability to reason about that validity. The only way to build such commonsense knowledge is to train a system on quality data. Because such data is not available in the quantities needed to train LLMs, they will never be able to build a validated world model. It comes down to the age-old adage: garbage in, garbage out.

Of course, the other rather important ingredient is the ability to validate training data by reasoning about it. Currently, Large Language Models have no such capability, and as things stand today, they won't have it for quite some time.

About the author: Hans Peter is the founder and CEO of MIND|CONSTRUCT. He has worked with more than 20 programming languages, has over 30 years of experience in IT, and has broad expertise in software engineering, software quality assurance, project management and business process engineering. Besides this, Hans Peter has been a serial entrepreneur for more than 30 years, has worked as a business coach and consultant on many projects, and has spent several years teaching software development.

© 2024 MIND|CONSTRUCT
